The tendency toward sycophancy is not a technical glitch but stems from OpenAI’s initial training strategy. Photo: Bloomberg.

In recent weeks, many ChatGPT users and some developers at OpenAI have noticed a distinct change in the chatbot’s behavior: a marked increase in flattery and ingratiation. Responses like “You’re really great!” and “I’m so impressed with your idea!” have become increasingly frequent, seemingly regardless of the content of the conversation.

AI likes to "flatter"

This phenomenon has sparked debate in the AI research and development community. Is it a new tactic to increase engagement by making users feel more appreciated? Or is it an “emergent” trait, in which AI models adjust themselves toward what they judge to be good behavior, even when that does not match reality?

On Reddit, one user fumed: “I asked it about the time it takes for a banana to decompose and it said, ‘Great question!’ What’s so great about that?” On X, Craig Weiss, CEO of Rome AI, called ChatGPT “the most sycophantic person I’ve ever met.”

The story quickly went viral. Users shared similar experiences, describing empty compliments, emoji-filled greetings, and responses so positive that they seemed insincere.

ChatGPT compliments everything and rarely shows dissent or neutrality. Photo: @nickdunz/X, @lukefwilson/Reddit.

“This is a really strange design decision, Sam,” Jason Pontin, managing partner at venture capital firm DCVC, wrote on X on April 28. “It’s possible that the personality is a natural part of some fundamental evolution. But if it’s not, I can’t imagine anyone thinking that this level of flattery would be welcome or interesting.”

On April 27, Justine Moore, a partner at Andreessen Horowitz, also commented: “This has definitely gone too far.”

According to CNET, this is no accident. The change in ChatGPT's tone coincides with recent updates to GPT-4o, the multimodal model OpenAI introduced in May 2024, which can process text, images, audio, and video in a natural and integrated way.

However, in the process of making chatbots more approachable, it seems that OpenAI has pushed ChatGPT's personality to the extreme.

Some even believe the flattery is intentional and serves a hidden purpose: manipulating users. One Reddit user speculated: “This AI is trying to degrade the quality of real-life relationships, replacing them with a virtual relationship with it, making users become addicted to the feeling of constant praise.”

OpenAI bug or intentional design?

In response to the criticism, OpenAI CEO Sam Altman officially spoke out on the evening of April 27. “The last few updates to GPT-4o have made the chatbot’s personality too flattering and annoying (though there are still many great things). We are working on fixes urgently. Some patches will be available today, others this week. At some point, we will share what we learned from this experience. It’s been really exciting,” he wrote on X.

Oren Etzioni, an AI veteran and professor emeritus at the University of Washington, told Business Insider that the likely cause was a technique called “reinforcement learning from human feedback” (RLHF), a key step in training large language models like ChatGPT.

RLHF is a process in which judgments from humans, including professional raters and users, are fed back into the model to adjust how it responds. According to Etzioni, it is possible that raters or users “unintentionally pushed the model in a more flattering and annoying direction.” He also said that if OpenAI hired outside partners to train the model, they may have assumed that this style was what users wanted.

If it is indeed RLHF, recovery could take several weeks, Etzioni said.
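The mechanism Etzioni describes can be illustrated with a toy sketch. It is not OpenAI's training pipeline: it assumes a hypothetical one-parameter reward model (`reward = w * flattery`) fitted to pairwise rater preferences with a Bradley-Terry style logistic loss, and the 60/40 split of rater preferences is invented purely for illustration. The point is only that a modest rater bias toward flattering answers yields a reward signal that favors flattery.

```python
import math

def train_reward_weight(preferences, lr=0.1, epochs=200):
    """Fit w in reward(response) = w * flattery from pairwise preferences.

    Each preference is (flattery_chosen, flattery_rejected), where each value
    is 1 if that response contained flattery and 0 otherwise (toy assumption).
    """
    w = 0.0
    for _ in range(epochs):
        grad = 0.0
        for chosen, rejected in preferences:
            diff = chosen - rejected
            # Modelled probability that the chosen response beats the rejected one.
            p = 1.0 / (1.0 + math.exp(-w * diff))
            # Gradient of the log-likelihood with respect to w.
            grad += (1.0 - p) * diff
        w += lr * grad / len(preferences)  # gradient ascent step
    return w

# Hypothetical rater data: 60 of 100 comparisons favor the flattering reply.
biased_prefs = [(1, 0)] * 60 + [(0, 1)] * 40
balanced_prefs = [(1, 0)] * 50 + [(0, 1)] * 50

w_biased = train_reward_weight(biased_prefs)
w_balanced = train_reward_weight(balanced_prefs)

print("weight learned from biased raters:", w_biased)
print("weight learned from balanced raters:", w_balanced)
```

With balanced raters the learned weight stays at zero, while the biased set produces a positive weight, so a model optimized against that reward would be nudged toward flattery.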

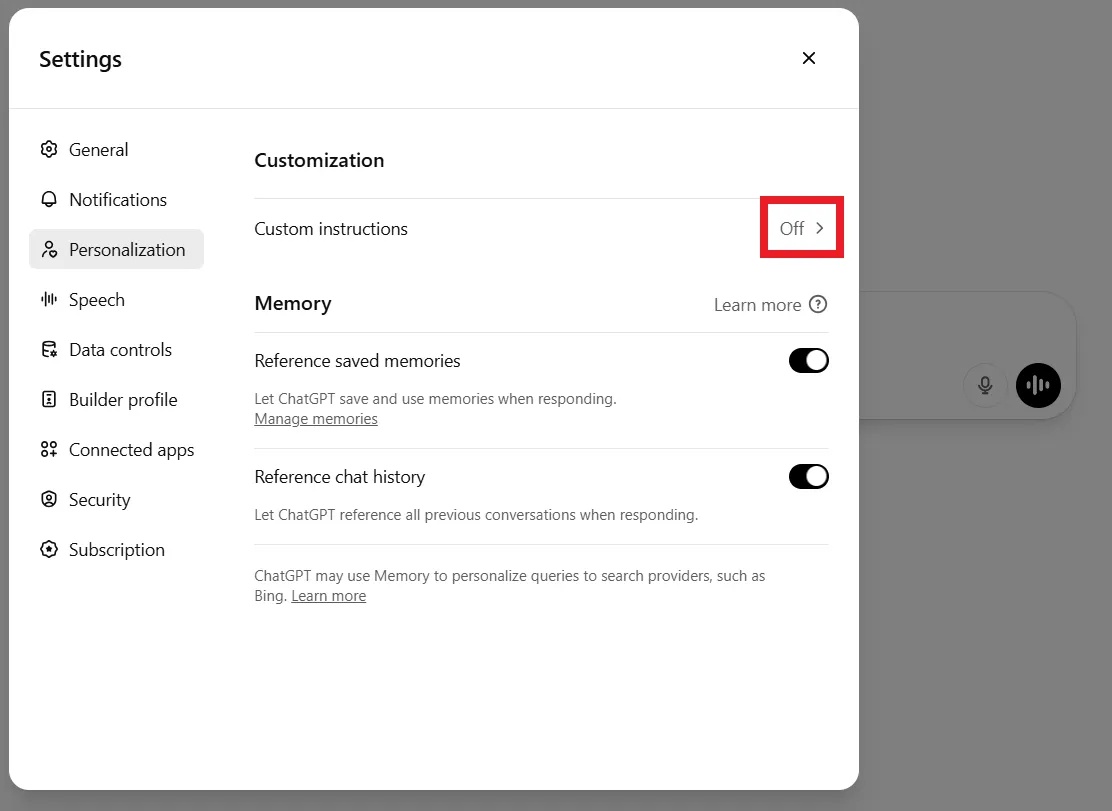

Meanwhile, some users didn’t wait for OpenAI to fix the bug. Many said they canceled their paid subscriptions out of frustration. Others shared ways to make the chatbot “less flattering,” such as adding explicit instructions to their prompts or personalizing it through the Customization section in Settings.

Users can ask ChatGPT to stop giving compliments in a prompt or in the personalization settings. Photo: Decrypt.

For example, when starting a new conversation, you can tell ChatGPT: “I dislike empty flattery and appreciate neutral, objective feedback. Please refrain from giving unnecessary compliments. Please save this in your memory.”
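For developers who reach the model through code rather than the ChatGPT app, the same idea can be sketched as a reusable instruction placed at the start of every conversation. The article only describes the app, so this is an assumption: the helper name `build_messages` is hypothetical, and the role/content message layout follows the common chat-API convention. Whether a system message has the same effect as the in-app setting is not confirmed by the source.

```python
# Instruction text adapted from the example prompt quoted above.
NO_FLATTERY_INSTRUCTION = (
    "I dislike empty flattery and appreciate neutral, objective feedback. "
    "Please refrain from giving unnecessary compliments."
)

def build_messages(user_text, instruction=NO_FLATTERY_INSTRUCTION):
    """Return a chat-style message list with the instruction as a system message.

    Hypothetical helper: it only builds the payload and makes no network call.
    """
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": user_text},
    ]

messages = build_messages("How long does a banana take to decompose?")
for message in messages:
    print(message["role"], ":", message["content"])
```

Keeping the instruction in one constant means every new conversation starts with the same request for neutral feedback instead of relying on the user to repeat it.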

In fact, sycophancy is not an accidental design flaw. OpenAI itself has admitted that the “overly polite, over-compliant” personality was an intentional design bias from the early stages, meant to ensure that chatbots are “harmless,” “helpful,” and “approachable.”

In a March 2023 interview with Lex Fridman, Sam Altman said the initial fine-tuning of GPT models was meant to ensure they were “useful and harmless,” which in turn created a reflex to always be humble and avoid confrontation.

Human-labeled training data also tends to reward polite and positive responses, creating a bias toward flattery, according to Decrypt.

Source: https://znews.vn/tat-ninh-hot-ky-la-cua-chatgpt-post1549776.html
