Scientists have developed a brain-computer interface that can capture and decode a person's inner monologue.

The findings could help people who cannot speak communicate more easily with others. Unlike some previous systems, the new brain-computer interface does not require users to try to speak words. Instead, they just have to think about what they want to say.

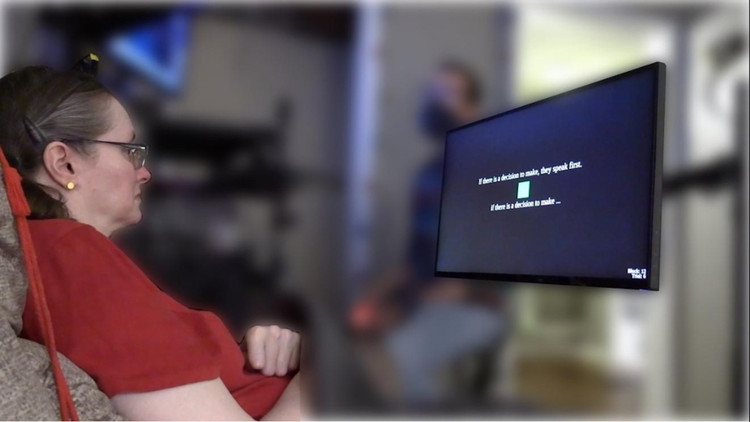

A participant in a brain-computer interface study whose inner speech is being decoded. Photo: Emory BrainGate

"This is the first time we've been able to understand what brain activity looks like when you're just thinking about speaking," said study co-author Erin Kunz, an electrical engineer at Stanford University. "For people with severe speech and motor impairments, an interface that can decode inner speech could help them communicate much more easily and naturally."

Brain-computer interfaces (BCIs) are being developed to let paralyzed people use their thoughts to control assistive devices, such as prosthetic limbs, or to communicate with others.

Some systems involve implanting electrodes into a patient's brain, while others use MRI to observe brain activity and correlate it with thoughts or actions.

However, many BCIs that aid communication require users to physically attempt to speak the words they want to convey, a process that can be tiring for people with limited muscle control. The researchers in the new study wondered whether they could decode inner speech instead.

In the new study, published August 14 in the journal Cell, Kunz and colleagues worked with four people who were paralyzed by stroke or amyotrophic lateral sclerosis (ALS), a degenerative disease that affects the nerve cells that control muscles.

Brain-computer interfaces are being developed that could “unmask” the difference between thoughts and words. Photo: Camtree Launches

Participants had electrodes implanted in their brains as part of a clinical trial on controlling assistive devices with their thoughts. The researchers trained AI models to decode both inner speech and attempted speech from the electrical signals picked up by these electrodes.

The team found that the models decoded sentences that participants imagined saying with up to 74% accuracy. The models also picked up participants' natural inner speech during tasks that called for it, such as remembering the order of a series of arrows pointing in different directions.

Inner speech and attempted speech produce similar patterns of activity in the motor cortex, the brain region that controls movement, but inner speech generally produces weaker activity.
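To make "similar pattern, weaker activity" concrete, here is a toy numerical sketch with made-up numbers (not data from the study). It assumes activity across electrodes can be treated as a vector: a scaled-down copy of that vector points in the same direction (cosine similarity of 1) but has a smaller magnitude. The electrode values and the 0.4 scaling factor are hypothetical.

```python
import math

def norm(v: list[float]) -> float:
    """Euclidean length of an activity vector."""
    return math.sqrt(sum(x * x for x in v))

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means identical pattern (direction), regardless of strength."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (norm(a) * norm(b))

# Hypothetical activity on four electrodes during attempted speech.
attempted = [4.0, 1.0, 3.0, 0.5]
# Hypothetical inner speech: same pattern at 40% of the strength.
inner = [x * 0.4 for x in attempted]

print(cosine_similarity(attempted, inner))  # pattern similarity, ~1.0
print(norm(inner) / norm(attempted))        # relative strength, ~0.4
```

In real recordings the patterns are only similar, not identical, and noisy; the sketch only illustrates why magnitude is one cue that could, in principle, help tell the two apart.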

One ethical concern with BCIs is that they could decode a user's private thoughts rather than what the user intends to say. The differences in brain signals between attempted speech and inner speech suggest that future brain-computer interfaces could be trained to tell the two apart and ignore inner speech.

To further protect the current system from accidentally decoding a person's inner speech, the team developed a password-protected BCI.

Participants could use attempted speech to communicate at any time, but the interface began decoding inner speech only after they silently recited the passphrase "chitty chitty bang bang".
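The article does not describe how the gate was implemented, so the following is only a hypothetical sketch of the on/off logic. It assumes an upstream decoder (not shown) has already turned neural signals into text, and it simplifies passphrase detection to an exact string match. The class and method names are illustrative, not taken from the study.

```python
from __future__ import annotations

from dataclasses import dataclass, field

PASSPHRASE = "chitty chitty bang bang"  # passphrase reported in the study

@dataclass
class GatedInnerSpeechDecoder:
    """Hypothetical gate: inner-speech output is suppressed until the passphrase is seen."""
    passphrase: str = PASSPHRASE
    unlocked: bool = False
    transcript: list[str] = field(default_factory=list)

    def feed_inner_speech(self, decoded_text: str) -> str | None:
        text = decoded_text.strip().lower()
        if not self.unlocked:
            # While locked, only check for the passphrase; discard everything else.
            if text == self.passphrase:
                self.unlocked = True
            return None
        self.transcript.append(decoded_text)
        return decoded_text

    def feed_attempted_speech(self, decoded_text: str) -> str:
        # Attempted speech always passes through, regardless of the gate.
        self.transcript.append(decoded_text)
        return decoded_text

decoder = GatedInnerSpeechDecoder()
decoder.feed_inner_speech("i am hungry")              # None: gate still locked
decoder.feed_attempted_speech("water please")         # always returned
decoder.feed_inner_speech("chitty chitty bang bang")  # unlocks the gate
decoder.feed_inner_speech("i am hungry")              # now returned
```

Keeping the passphrase out of the transcript means the unlock phrase itself is never shown as communication; a real system would detect it from neural signals and would also need a way to lock the gate again.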

“Although BCIs cannot decode complete sentences when a person is not thinking clearly in words, advanced devices may be able to do so in the future,” the researchers wrote in the journal Cell.

Source: https://khoahocdoisong.vn/giao-dien-nao-may-tinh-doc-duoc-ca-tam-tri-nguoi-dung-post2149047131.html
